For years, as a creative tech community, we have been able to take pride in developing our own tools and code to “generate” computational outputs and processes, crafting digital universes where we could freely operate as “chief architects”. We could establish rules and manipulate them to yield results that were both expected and unexpected. Alongside the rules, we have always played with datasets as well: scraping, collecting, and arranging are the typical actions for openly using digital material to craft interactive narratives. From software to hardware, the convergence of arts and design, makers, and open technologies gave birth to a plethora of empowering creative tools, collectively owned and consistently shared.

In the field of Interaction Design, opening up technologies has always been a favored approach. It is one of the youngest design practices, and one in which creators realized the importance of sketching projects directly with hardware and software, not just with a pencil. From Arduino to Processing and several graphical programming environments, the interaction design community has explored all kinds of tools for creating products, installations, and sentient environments. In doing so, it also focuses on opening the black box of technology through onboarding interfaces.

Designing interactions often involves opening up technologies and making it easier for non-experts to “control” them, sometimes by immersing them in a compelling story. Through buttons, graphical user interfaces, and interactive projects, complex technological concepts have always been unboxed by creating experiences accessible to the masses.

This is a reflection that could arise while interacting with the latest research installation by Dotdotdot, titled “Data Bugs – AI is a Mirror.” The installation, in its version 0.1, was showcased in the new spaces of Dotdotdot, a Milan-based interaction design studio that celebrated its 20th anniversary during Fuorisalone Design Week 2024.

The project is presented as “an immersive emotional installation that makes tangible and experiential the working mechanism of a neural network, demystifying the omnipotence that is too often attributed to AI.”

The studio aimed to leverage what is called an Explainable AI approach to narrate the generative power of AI and reflect on the social implications of data representation and polarization. The reflection mostly focuses on the responsibilities we have in providing data and training a technology that is revolutionizing many industries. As interaction designers, the team at Dotdotdot decided to address this topic by creating an imaginary universe, told in the typical language of a science museum, in which human society in the age of AI is compared to insect society.

Two artificial intelligence models are fed with images of a variety of insect species. The dataset based on homogeneous information produces similar, stereotypical images (e.g. a black or a red ant), while the dataset based on balanced information, which includes the diversity of types found in nature (there are around 14,000 species of ants in the world), allows the creation of more varied results.
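To make the difference between a homogeneous and a balanced dataset concrete, here is a minimal Python sketch that measures how diverse a set of species labels is, using Shannon entropy. It is only an illustration: the two toy datasets, the species names, and the function `shannon_diversity` are assumptions invented for this example, not the data or code used in the installation.

```python
import math
from collections import Counter


def shannon_diversity(labels):
    """Shannon entropy (in bits) of the species distribution in a dataset."""
    counts = Counter(labels)
    total = sum(counts.values())
    return sum(-(n / total) * math.log2(n / total) for n in counts.values())


# Hypothetical toy datasets: species labels only, not real training data.
homogeneous = ["black ant"] * 90 + ["red ant"] * 10
balanced = ["black ant", "red ant", "leafcutter ant", "weaver ant"] * 25

print(f"homogeneous: {shannon_diversity(homogeneous):.2f} bits")
print(f"balanced:    {shannon_diversity(balanced):.2f} bits")
```

With four equally represented species the balanced set reaches 2 bits, the maximum for four classes, while the set dominated by one species stays well below 1 bit. A generative model can only reproduce the variety it has seen, so a low-diversity training set tends to lead to stereotypical outputs.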

The synthetic species generated by the more diverse datasets are almost alienating; they do not conform to what we might expect but are closer to the diversity found in nature. The history of science is heavily shaped by the cognitive biases of male scientists, who were for a long time the only people entitled to create datasets. Those non-diverse datasets oriented society’s gaze for centuries.

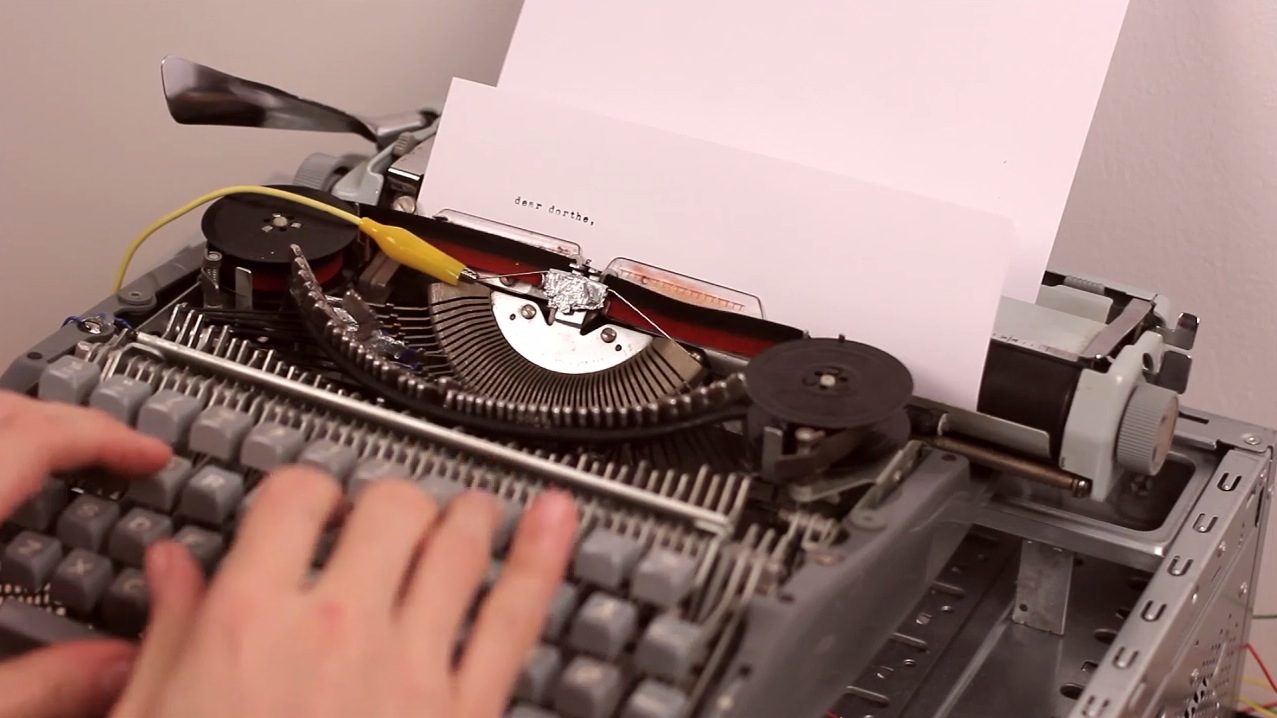

The generative AI displaying the synthetic insects is accompanied in the space by a natural interface that utilizes a motion camera. The interface tracks the visitors’ positions in a way that allows them to understand and control the generative process by selecting certain parameters. Both the visualization (output) and the interface (input) enable the unboxing of the biased results of the AI models. The parameters are technically the factors that influence the model.
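One way to picture how a tracked position could become a model parameter is the small Python sketch below. It is purely hypothetical: the article does not describe the installation's actual mapping, so the function `position_to_weight`, the room width, and the idea of a single blend weight between the two models are all assumptions made for illustration.

```python
def position_to_weight(x_meters, room_width_meters):
    """Map a visitor's horizontal position to a blend weight in [0, 1].

    Hypothetical mapping: 0.0 means "only the homogeneous model",
    1.0 means "only the diverse model".
    """
    if room_width_meters <= 0:
        raise ValueError("room width must be positive")
    # Clamp so that positions outside the tracked area stay in range.
    return min(1.0, max(0.0, x_meters / room_width_meters))


# A visitor standing in the middle of an assumed 4-meter-wide tracked area.
print(position_to_weight(2.0, 4.0))
```

Clamping keeps the parameter valid even when the camera reports a position slightly outside the tracked area.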

On the occasion of my studio visit in Milan, I had the chance to put more questions to Alessandro Masserdotti, a science philosopher by training and an interaction designer by experience, who since 2004 has been the founder and creative technologist of Dotdotdot studio.

SC: Why, as interaction designers, did you decide to use the metaphor of insect classification and propose this storytelling? What do you think is the strength of this approach for explaining AI to the public?

AM: We knew we were tackling a theme with various political and ethical implications—the issue of stereotypes and prejudices inherent in our society, which are “carried” into AI models during training. However, since we are neither sociologists nor politicians in the strict sense, we decided to address this theme using the metaphor of the insect society, aiming for reflection and synthesis to occur in the visitor in a mediated manner. We focused on the problem of biases in datasets used for model training phases without directly raising any controversies. As designers, our aim was to raise awareness of this issue, and insects proved perfect for this purpose. Lastly, when a problem is encountered in software, it’s common among developers to say there’s a bug (an insect), hence the birth of DATABUGS.

SC: You stated that this project is version 0.1 of a research endeavor. What have you discovered during the process that will guide the next iteration and, in general, your practice in the future, in terms of working methods and conceptualizing interactive installations?

AM: For us, as designers who often use technology as a tool, it’s crucial to deeply understand the tools we use, their implications, and impacts on both the stakeholders in our projects and ourselves as designers. We were interested in understanding, as thoroughly as possible, how these new generative AI models work to begin imagining how they could be integrated into our projects. What we discovered is that these tools are still in a fairly experimental phase, albeit rapidly evolving. They still need to be experimented with and developed further. To begin using them to their fullest extent (as is happening at the moment), they would need, at the very least, a user manual with warnings.

SC: While ideating and prototyping the installation, did you go through the typical process of creating new technological tools and workflows? Did you use open-source tools, generate shareable solutions, or were you merely users of AI black boxes to talk about AI black boxes?

AM: In reality, one of the aims of this installation was to convey that generative AI models are not black boxes. The fact that they are based on statistical and non-deterministic models doesn’t mean we don’t know why they do what they do; it just means we can’t determine the exact outcome. This, from a certain perspective, contributes to their allure. Training a generative AI model from scratch, one that is effective and competent, has unfortunately become an endeavor that a small studio like ours cannot afford. It requires weeks of calculations using entire computer farms consuming several MWh of electricity. Only large companies like Microsoft or Apple, or public university consortia, can afford to invest so much in training a model. Fortunately, not all of these entities keep the results and access to the models closed. For example, we started with a generative AI model called Stable Diffusion, which is open-source. It was created by a university consortium, which used a 5-billion-image dataset, also open-source, for training. And thanks to the fact that all the source code and models were open-source, we were able to start from these and, in a few weeks, perform fine-tuning to work vertically on what interested us. I would say, based on 20 years of experience, that without the open-source approach and sharing of knowledge, small studios like ours could do very little. Essentially, when we can and don’t have particular contractual constraints, we also try to contribute by releasing our code and solutions in an open manner. However, things haven’t changed much in the last 20 years, in the sense that we still witness the clash between a more open approach based on knowledge sharing and an extremely protective and closed one. Over the years, though, hybrid approaches have slowly been emerging, and these are probably the most interesting.

Through Alessandro’s words, it is possible to reflect on the role of creative tech as a way to unbox AI. While interacting with Data Bugs, in fact, we are exposed to a tangible experience of Explainable AI and to a sort of “Explainable Science” that tells us more about ourselves, but also about how the domain of science and technology has always been a non-objective mirror that historically contributed to creating a highly biased representation and interpretation of both nature and society.

Dotdotdot is a multidisciplinary design studio founded in Milan in 2004 and one of Italy’s first to work in the interactive design field. Dotdotdot specializes in Exhibition and Interaction Design — designing museum paths, Corporate Experiences, temporary and permanent multimedia installations. Over the years, it has consolidated expertise and experience in developing and designing digital strategies and customized integrated digital systems for companies, museums, historical archives, working environments, healthcare facilities, and projects dedicated to Smart Living. Research and technological innovation are the basis of all its projects.