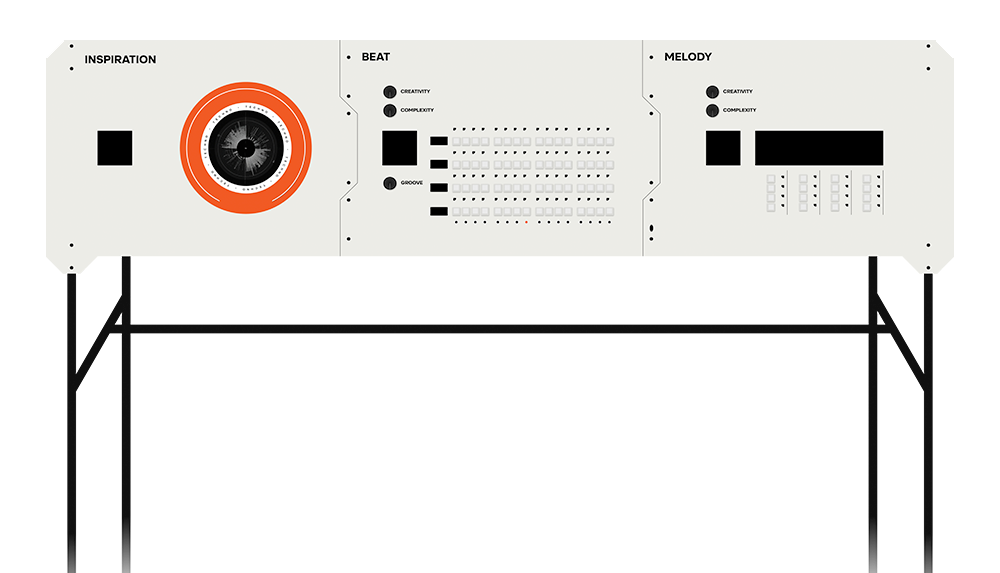

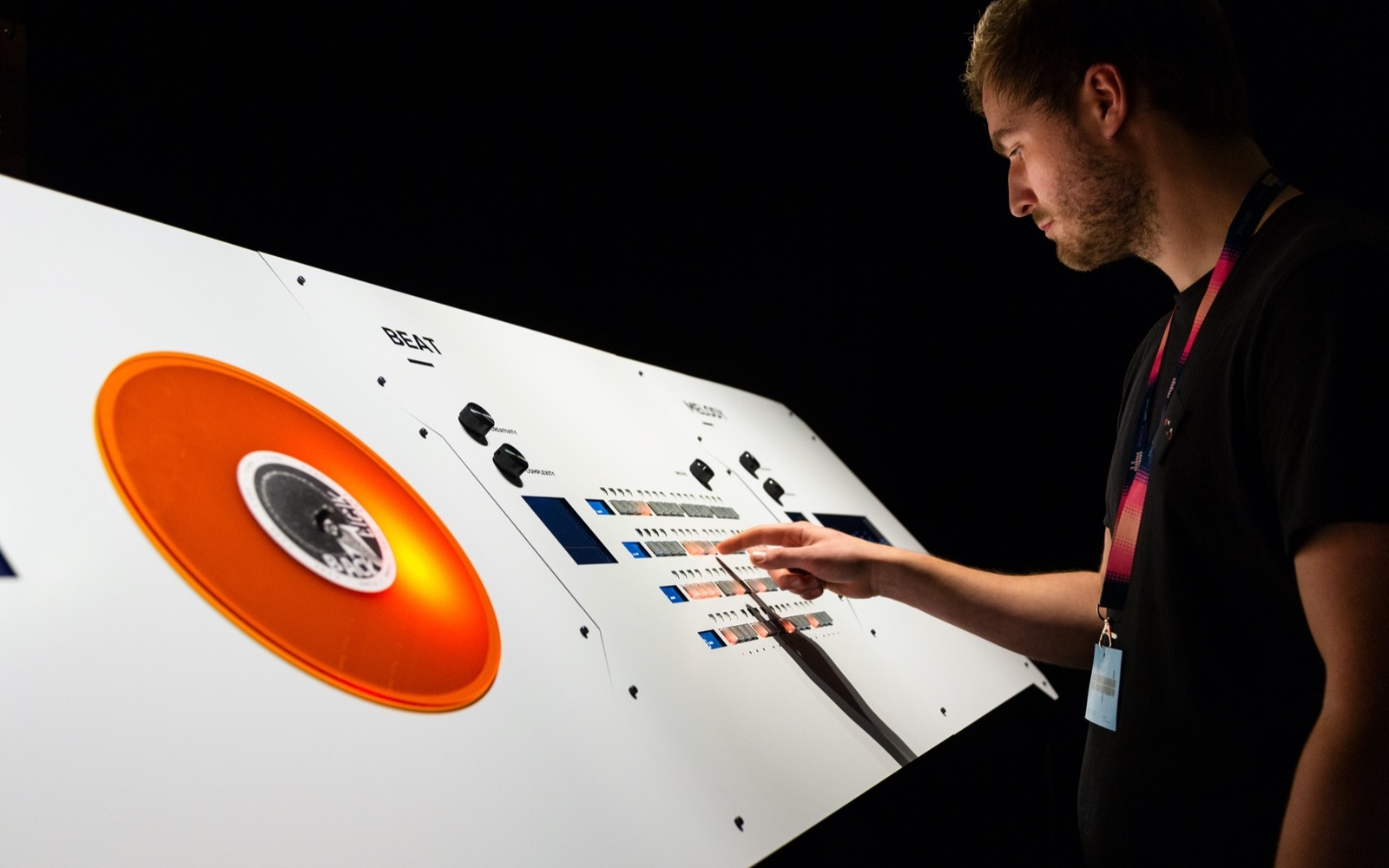

Created by Bureau Moeilijke Dingen, Anima is an interactive installation that facilitates a musical jam session between humans and machines. Operating much like a sequencer, Anima uses open-source AI algorithms to generate musical sequences, but with a twist: it does not just let you make changes, it also acts as a musician in its own right, an equal and active participant in the jam session. Anima can bring in and overwrite sequences, variations and transitions that you can then adapt and build on. Through this interaction and interplay, anyone, alone or with others, can freely experiment with musical ideas and boundaries, as if they were jamming in a band.

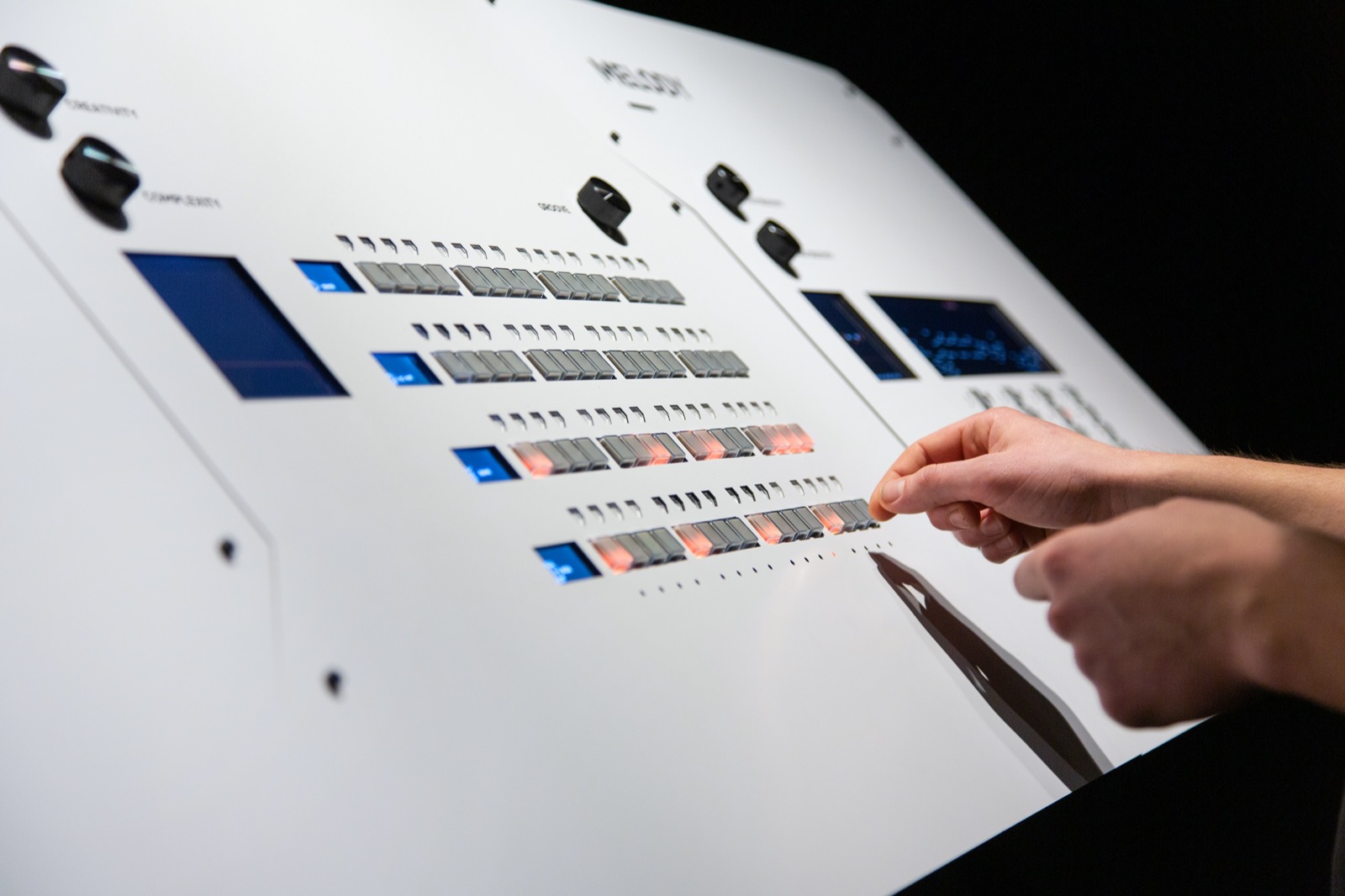

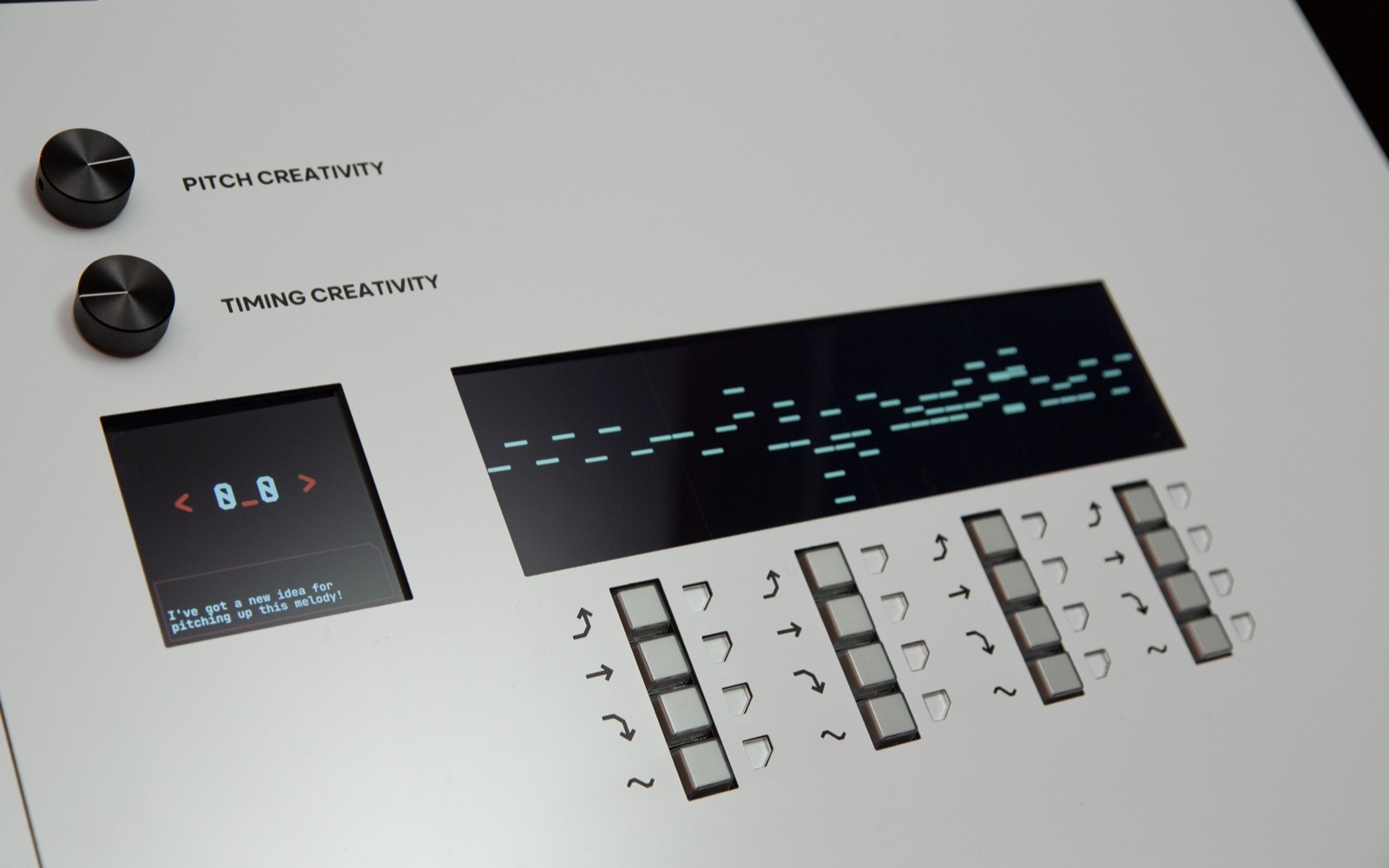

Place a disc with your desired musical genre on the inspiration module to start the jam session. Based on the genre, Anima generates a beat and a melody in the two corresponding modules, laying the foundation for the jam. Now it is time to jam! You can enter simple drum patterns by hand (in the beat module) and give the AI algorithm instructions to change the melody per measure (in the melody module). Because the interaction has a low threshold, anyone, and non-musicians in particular, can reflect on the current composition and decide what they would like to change. Anima proposes its new sequences through indicators and through a virtual, Tamagotchi-like character that reacts to the user's input. You can also influence Anima's musical sequences by adjusting the AI parameters, which tune the algorithm to generate more creative, complex and/or groovy melodies and rhythms.
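The text does not say how the AI-parameter controls are translated into model settings. As a minimal sketch, assume each control arrives as a 7-bit MIDI value (0 to 127) and is mapped linearly onto a parameter range. The parameter names and ranges in `PARAM_RANGES`, and the helper `cc_to_param`, are hypothetical illustrations, not Anima's actual values.

```python
# Hypothetical ranges: Anima's real parameter names and ranges are not documented.
PARAM_RANGES = {
    "creativity": (0.5, 1.5),  # e.g. used as a sampling temperature
    "complexity": (0.0, 1.0),
    "groove": (0.0, 1.0),
}


def cc_to_param(name: str, cc_value: int) -> float:
    """Linearly map a 7-bit controller value (0-127) onto a parameter's range."""
    if not 0 <= cc_value <= 127:
        raise ValueError(f"controller value out of range: {cc_value}")
    low, high = PARAM_RANGES[name]
    return low + (high - low) * cc_value / 127


if __name__ == "__main__":
    for value in (0, 64, 127):
        print(value, round(cc_to_param("creativity", value), 3))
```

A linear mapping keeps each knob predictable; an installation could instead use a curved mapping if most of the musically interesting range sits near one end.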

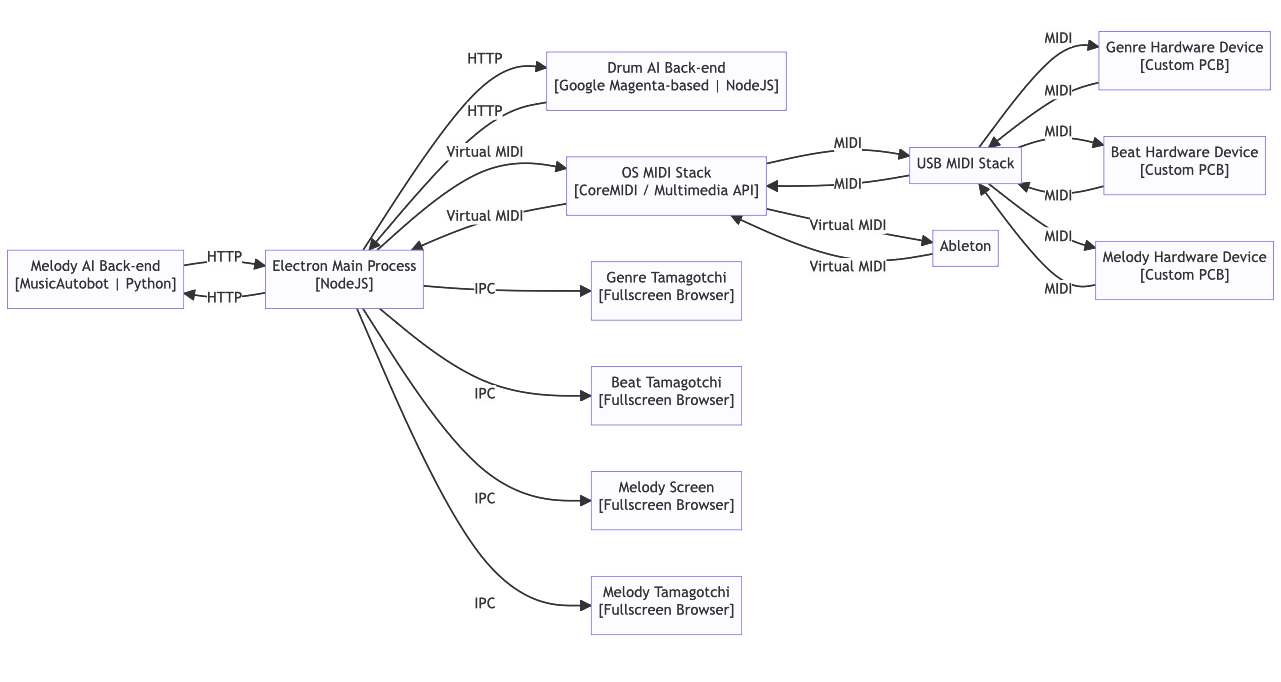

The installation's architecture is built around a central PC that handles the AI tasks, displays, coordination and audio processing, together with a number of custom hardware units that handle user input and output as well as the smaller screens in the rhythm section. The brains of the operation is an Electron (Node.js) main process on the PC that coordinates all parts of the application and keeps the application state in Redux. It is connected over USB to three PCBs, each with a Teensy microcontroller: one for genre selection, one for beat I/O and one for melody I/O. The boards send out MIDI Control Change messages in response to user input (keyboard keys, potentiometers or genre discs). The main process receives these and in turn sends out Control Change messages of its own to light the various LEDs and show the general state.
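Control Change messages are simple three-byte MIDI messages: a status byte of `0xB0` plus the channel number (0 to 15), followed by a controller number and a value (both 0 to 127). The following minimal Python sketch shows how a main process could decode incoming CCs from the boards and build outgoing ones for LED feedback. The helper `led_feedback`, and its convention of echoing a key's controller number back to light that key's LED, are hypothetical assumptions rather than Anima's documented protocol.

```python
from typing import NamedTuple, Optional

CONTROL_CHANGE = 0xB0  # upper nibble of the status byte for Control Change


class ControlChange(NamedTuple):
    channel: int     # 0-15
    controller: int  # 0-127
    value: int       # 0-127


def parse_cc(message: bytes) -> Optional[ControlChange]:
    """Decode a 3-byte MIDI message; return None if it is not a Control Change."""
    if len(message) != 3:
        return None
    status, controller, value = message
    if (status & 0xF0) != CONTROL_CHANGE:
        return None
    return ControlChange(status & 0x0F, controller & 0x7F, value & 0x7F)


def make_cc(channel: int, controller: int, value: int) -> bytes:
    """Encode a Control Change message, e.g. to switch an LED on one of the boards."""
    if not (0 <= channel <= 15 and 0 <= controller <= 127 and 0 <= value <= 127):
        raise ValueError("channel, controller or value out of range")
    return bytes([CONTROL_CHANGE | channel, controller, value])


def led_feedback(message: bytes) -> Optional[bytes]:
    """Hypothetical convention: light the LED that shares the key's controller number."""
    cc = parse_cc(message)
    if cc is None:
        return None
    led_value = 127 if cc.value > 0 else 0  # key down -> LED on, key up -> LED off
    return make_cc(cc.channel, cc.controller, led_value)
```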

The main process is the arbiter of the current rhythm and melody MIDI sequences and keeps them in memory. Each genre has a set of starting chords. When a genre is selected, the main process sends requests to two local HTTP back-ends that host the rhythm and melody models, respectively. These requests return MIDI sequences based on the starting chords, and the result differs with every request. For the rest of the jam, the main process requests variations on the current MIDI sequences. Such requests can be triggered by human input (melody) or by internal decisions (both melody and rhythm). Each request sends the current sequence as input, so the music evolves by recursively iterating on an existing sequence.

To keep users informed about the progress of the jam, the team installed four LCD screens in the body of the installation. Apart from the one that visualises the current melody, three screens feature an animated assistant (the Tamagotchi) that encourages and informs the user through text and visuals. All windows are Electron browser windows that use React to display their contents, and they receive the current state from the main process via inter-process communication. Lastly, a debugger window lets the team inspect and tune the application state.
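The recursive variation loop, in which each request sends the current sequence and the reply replaces it, can be sketched as follows. The back-ends' HTTP API is not described, so the request is abstracted as a plain function. `Note`, `jam` and `make_fake_backend` are hypothetical names, and the fake back-end simply nudges pitches at random instead of running a model.

```python
import random
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Note:
    pitch: int     # MIDI note number, 0-127
    start: int     # onset in 16th-note steps
    duration: int  # length in 16th-note steps


MidiSequence = List[Note]
VariationFn = Callable[[MidiSequence], MidiSequence]


def jam(seed: MidiSequence, request_variation: VariationFn, rounds: int) -> List[MidiSequence]:
    """Recursively iterate: each round's output becomes the next round's input."""
    history = [seed]
    current = seed
    for _ in range(rounds):
        # In Anima this step is a request to a local model back-end (API not shown).
        current = request_variation(current)
        history.append(current)
    return history


def make_fake_backend(seed: int) -> VariationFn:
    """Hypothetical stand-in for a model back-end: shifts each pitch by -2..+2 semitones."""
    rng = random.Random(seed)

    def vary(seq: MidiSequence) -> MidiSequence:
        return [
            Note(min(127, max(0, n.pitch + rng.randint(-2, 2))), n.start, n.duration)
            for n in seq
        ]

    return vary


if __name__ == "__main__":
    melody = [Note(60, 0, 4), Note(64, 4, 4), Note(67, 8, 8)]
    for i, seq in enumerate(jam(melody, make_fake_backend(42), rounds=3)):
        print(i, [n.pitch for n in seq])
```

Keeping the whole history rather than only the latest sequence is a design choice that makes it easy to show past states in a debugger or to step back to an earlier variation.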

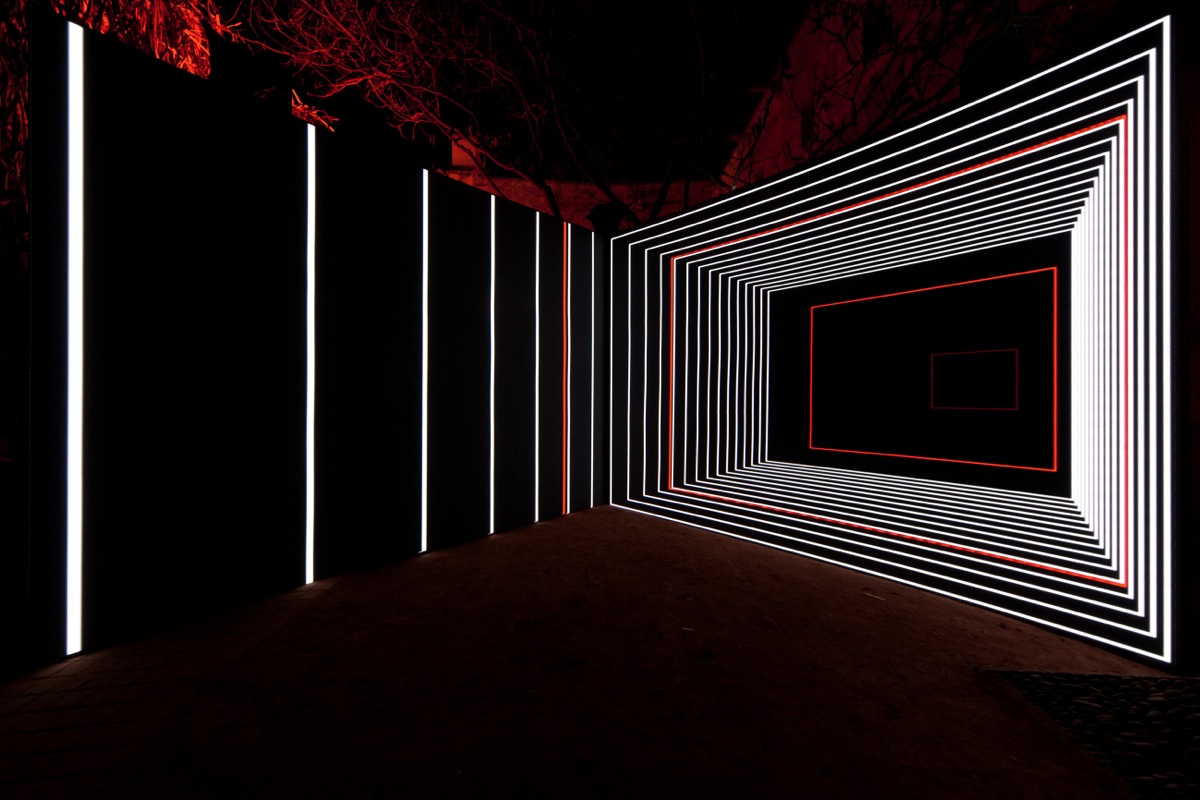

The main process is also responsible for playing back the current MIDI sequences in memory. This output is sent over a virtual MIDI bus to Ableton, which in turn sends a MIDI clock back to the application to keep time. The virtual MIDI bus carries the musical sequences on three channels (rhythm, melody and chords), while a separate bus carries control changes (e.g. BPM, start/stop, genre switches). Each genre has its own set of channels and instruments, which are directly controlled by the MIDI coming in from the main process. Audio is routed via a general line-out to a headphone amplifier and a stereo speaker setup. The team also added a number of LED bars that visualise the music as it plays. To drive them, the application sends Art-Net datagrams over a network interface to a custom controller, which is powerful enough to handle multiple 120 FPS streams to several controllers over a single Ethernet interface. It was developed in-house in collaboration with Amsterdam-based Lumus Instruments, who also generously provided the LED bars.
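MIDI clock runs at 24 pulses per quarter note, so a 16th-note step corresponds to 6 clock ticks, and the tempo follows from the tick interval as BPM = 60 / (24 × interval in seconds). The sketch below shows one way an application could follow Ableton's clock and trigger a sequencer step on every 16th note. The `ClockFollower` class and its step callback are hypothetical; Anima's own playback scheduling may work differently.

```python
from typing import Callable, List, Optional

CLOCK, START, STOP = 0xF8, 0xFA, 0xFC  # MIDI System Real-Time status bytes
PPQN = 24                              # MIDI clock: 24 pulses per quarter note
TICKS_PER_STEP = PPQN // 4             # 6 ticks per 16th-note step


class ClockFollower:
    """Follows incoming MIDI clock and fires a callback on every 16th-note step."""

    def __init__(self, on_step: Callable[[int], None]) -> None:
        self.on_step = on_step
        self.running = False
        self.ticks = 0
        self.last_time: Optional[float] = None
        self.intervals: List[float] = []

    def handle(self, status: int, timestamp: float) -> None:
        if status == START:
            # Start resets the song position; the next clock tick is the downbeat.
            self.running, self.ticks, self.last_time = True, 0, None
            self.intervals.clear()
        elif status == STOP:
            self.running = False
        elif status == CLOCK and self.running:
            if self.last_time is not None:
                self.intervals.append(timestamp - self.last_time)
                self.intervals = self.intervals[-PPQN:]  # average over the last beat
            self.last_time = timestamp
            if self.ticks % TICKS_PER_STEP == 0:
                self.on_step(self.ticks // TICKS_PER_STEP)
            self.ticks += 1

    @property
    def bpm(self) -> Optional[float]:
        """Tempo estimated from the mean interval between recent clock ticks."""
        if not self.intervals:
            return None
        mean = sum(self.intervals) / len(self.intervals)
        return 60.0 / (mean * PPQN)
```

Letting the audio host own the clock, as the text describes, means sequencer steps stay locked to what Ableton is actually playing instead of drifting against a separate timer in the application.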

Anima combines several AI models, each used in a different context. For rhythm generation, the team builds on the Magenta Studio models created by Google AI; for melody generation, they build on the MusicAutoBot project created by Andrew Shaw. The rhythm side relies on three TensorFlow models from the Magenta Studio suite. The first, Continue, creates variations on an existing drum rhythm: it is a recurrent neural network that can extend that sequence by up to 32 measures, guided by a temperature setting. Generate is based on the same model but employs a variational autoencoder to generate a drum rhythm from a melody; they use it at the start of a new jam to create a rhythm from scratch. Both models are trained on a corpus of millions of audio and MIDI sequences (e.g. Bach Doodle, CocoChorales, MAESTRO and NSynth). Lastly, they use Groove to shift the timing slightly, according to a temperature setting, so that the rhythm sequences feel more human. It is a recurrent neural network trained on a corpus of MIDI sequences played by human drummers (e.g. GMD, E-GMD and data recorded by the Google AI team itself).
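Temperature is the setting that recurs across these models. In its standard formulation, a model's logits are divided by a temperature T before the softmax, so low values sharpen the distribution towards the most likely event and high values flatten it towards uniform. This is a generic sketch of that technique, assuming Magenta's temperature setting behaves in this standard way; it is not Magenta's own code, and `sample_event` is a hypothetical helper.

```python
import math
import random
from typing import List, Sequence


def softmax_with_temperature(logits: Sequence[float], temperature: float) -> List[float]:
    """Convert logits to probabilities, with p_i proportional to exp(logit_i / T)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_event(logits: Sequence[float], temperature: float, rng: random.Random) -> int:
    """Draw the index of the next event (e.g. a drum hit) from the tempered distribution."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


if __name__ == "__main__":
    logits = [2.0, 1.0, 0.0]  # made-up scores for three candidate events
    for t in (0.1, 1.0, 10.0):
        print(t, [round(p, 3) for p in softmax_with_temperature(logits, t)])
```

At T = 0.1 almost all probability lands on the highest-scoring event, while at large T the three events become nearly equally likely, which is why a higher temperature tends to produce more surprising rhythms.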

For melody generation, they rely on MusicAutoBot, a multitask Transformer model that generates MIDI melody sequences. It builds on several state-of-the-art language-model architectures (Transformer-XL, Seq2Seq and BERT). These models are designed to fill in blanks in sequences of tokens (typically natural language) and are therefore well suited to predicting a melody from a set of input chords. The team uses the model both to generate an initial melody and to regenerate parts of an existing melody through masking. A set of temperature controls helps steer the output in a general direction. MusicAutoBot is trained on a corpus of public MIDI libraries (e.g. Classical Archives, HookTheory and Lakh).
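Regenerating part of a melody through masking can be illustrated at the token level. In this sketch a melody is a list of bars, each a list of tokens; the token strings and the `<MASK>` symbol are hypothetical and do not match MusicAutoBot's actual vocabulary, and the model call itself is left out. The hypothetical helper `mask_bars` builds the model input by hiding the bars to be rewritten, and `fill_masks` writes the predictions back into the hidden positions.

```python
from typing import List, Sequence, Set

MASK = "<MASK>"  # hypothetical mask token; MusicAutoBot's own vocabulary differs

Bar = List[str]


def mask_bars(bars: Sequence[Bar], to_mask: Set[int]) -> List[Bar]:
    """Replace every token in the selected bars with MASK; keep the rest as context."""
    return [[MASK] * len(bar) if i in to_mask else list(bar) for i, bar in enumerate(bars)]


def fill_masks(masked: Sequence[Bar], predictions: Sequence[str]) -> List[Bar]:
    """Write predicted tokens back into the masked positions, in order."""
    n_masked = sum(tok == MASK for bar in masked for tok in bar)
    if len(predictions) != n_masked:
        raise ValueError(f"expected {n_masked} predictions, got {len(predictions)}")
    it = iter(predictions)
    return [[next(it) if tok == MASK else tok for tok in bar] for bar in masked]


if __name__ == "__main__":
    # Hypothetical tokens: "n60" = note C4, "d4" = a duration of four 16th notes.
    melody = [["n60", "d4"], ["n62", "d4"], ["n64", "d4"]]
    masked = mask_bars(melody, {1})
    print(masked)
    print(fill_masks(masked, ["n65", "d2"]))
```

For simplicity the sketch assumes the model returns exactly one token per masked position; a real Transformer can generate a different number of tokens for a masked span.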

For more information about the project, visit moeilijkedingen.nl/cases/anima.
